How do you use Google Cloud Storage from NodeJS? It's actually easy once you have found the right library and a sample that works. Finding them, though, was a long journey that took most of a day. The most useful resource was an article by Sebastien, who had run into the same problem. Shortly afterwards I found this documentation, which helped a lot: https://googlecloudplatform.github.io/google-cloud-node/#/docs/storage/1.1.0/storage

Here is the short summary, in the hope that someone reads it and gets a simple working example of NodeJS + Google Cloud Storage.

Prerequisite: Access

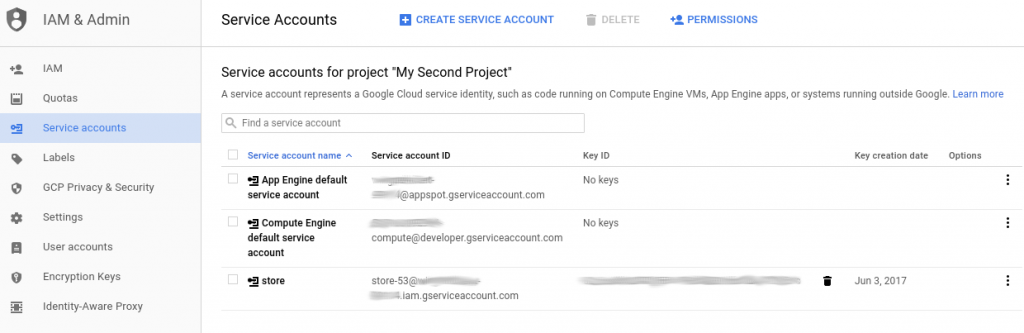

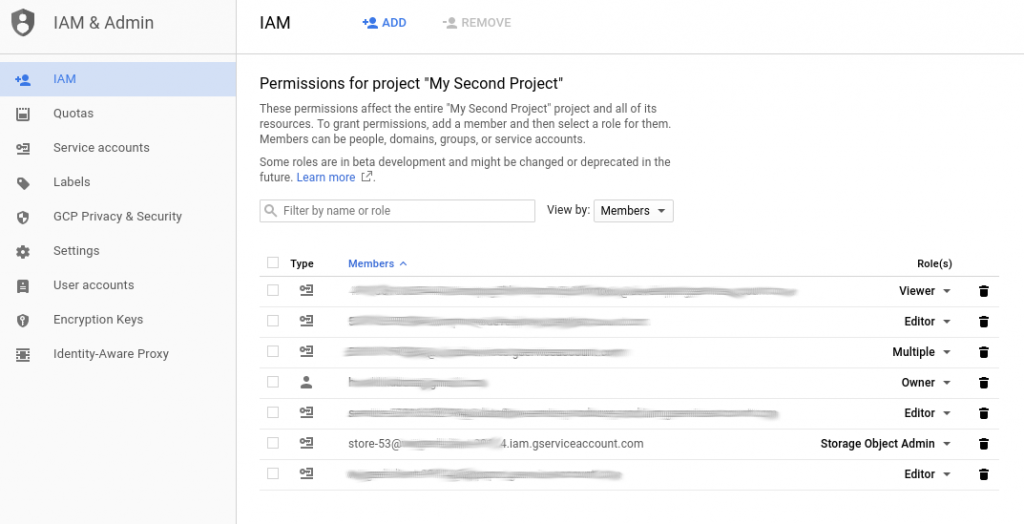

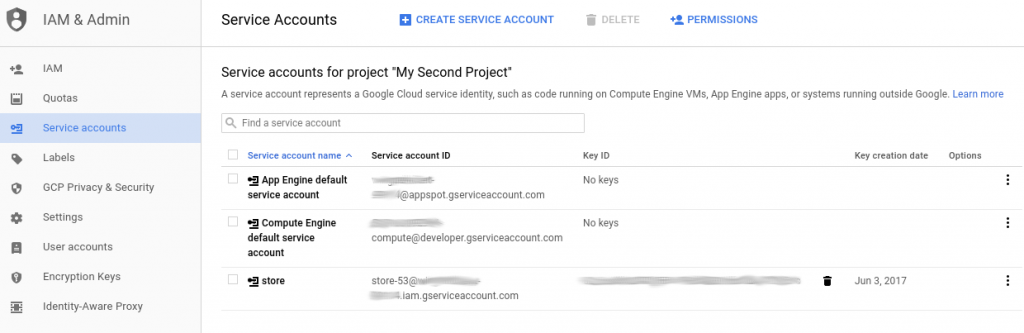

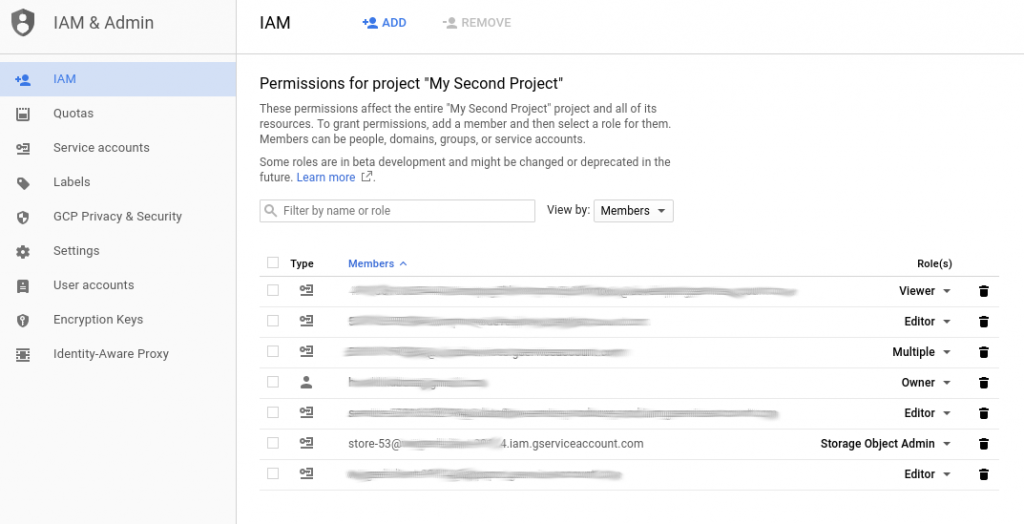

In the Google Cloud Console, go to IAM & Admin, create a service account, and create a JSON key for it. Keep this key safe: it is the file you pass as `keyFilename` in the code below. The key file, the project ID, and the right permissions are all you need to get access.
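To catch a wrong or truncated key file early, you can check it before handing it to the library. The sketch below is a hypothetical helper (`parseServiceAccountKey` and `readServiceAccountKey` are not part of any library). It assumes the standard fields of a downloaded service-account JSON key (`type`, `project_id`, `client_email`, `private_key`) and also shows that the project ID can be read from the key itself.

```javascript
const fs = require('fs');

// Fields present in a service-account JSON key downloaded from the Console.
const REQUIRED_FIELDS = ['type', 'project_id', 'client_email', 'private_key'];

// Hypothetical helper: parse key JSON text and return what the client needs.
// Throws if it is not a service-account key or if fields are missing.
function parseServiceAccountKey(jsonText) {
  const key = JSON.parse(jsonText);
  if (key.type !== 'service_account') {
    throw new Error('not a service-account key: type=' + key.type);
  }
  const missing = REQUIRED_FIELDS.filter((field) => !key[field]);
  if (missing.length > 0) {
    throw new Error('key is missing fields: ' + missing.join(', '));
  }
  return { projectId: key.project_id, clientEmail: key.client_email };
}

// Hypothetical convenience wrapper that reads the key from disk, e.g. './KEY.json'.
function readServiceAccountKey(path) {
  return parseServiceAccountKey(fs.readFileSync(path, 'utf8'));
}
```

The returned `clientEmail` is the service account address that you grant roles to under IAM.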

Under IAM, give the new service account the Storage Admin role if it needs to see all buckets. If you only want to connect to one bucket, Storage Object Admin is enough (you can grant it on that bucket alone), or a narrower role, depending on what you need.
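If you would rather grant roles from code than click through the Console, note that an IAM policy is a list of bindings, each pairing a role ID with members such as `serviceAccount:<email>`. The sketch below only builds such a binding. The role IDs are the predefined Cloud Storage roles named in this post, while `bindingFor` and the "need" labels are hypothetical names for illustration. Applying the binding (for example through the client's `bucket.iam.getPolicy()` / `bucket.iam.setPolicy()`) is not shown and should be checked against the library docs for your version.

```javascript
// Predefined Cloud Storage role IDs, keyed by hypothetical "need" labels.
const STORAGE_ROLES = {
  allBuckets: 'roles/storage.admin',                // Storage Admin
  objectsReadWrite: 'roles/storage.objectAdmin',    // Storage Object Admin
  objectsReadOnly: 'roles/storage.objectViewer',    // Storage Object Viewer
  objectsCreateOnly: 'roles/storage.objectCreator'  // Storage Object Creator
};

// Hypothetical helper: build one IAM policy binding ({role, members})
// that grants the chosen role to a service account.
function bindingFor(serviceAccountEmail, need) {
  if (!Object.prototype.hasOwnProperty.call(STORAGE_ROLES, need)) {
    throw new Error('unknown need: ' + need);
  }
  return {
    role: STORAGE_ROLES[need],
    members: ['serviceAccount:' + serviceAccountEmail]
  };
}
```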

BTW, in my experience permission updates take effect almost instantly.

Task 1: See Buckets

This is the NodeJS program running on my home PC. I split it into three parts, but it is really one file.

Listing buckets requires the `storage.buckets.list` permission, which the Storage Admin role (see above) includes.

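```javascript
// @google-cloud/storage 1.x: the module export is a factory function.
// (From 2.x on, use: const {Storage} = require('@google-cloud/storage');
//  const gcs = new Storage({projectId: ..., keyFilename: ...});)
var gcs = require('@google-cloud/storage')({
  projectId: 'some-thing-12345',
  keyFilename: './KEY.json' // absolute path ok too
});

// GOOGLE_APPLICATION_CREDENTIALS=$keyFilename was not needed,
// contrary to some documentation, because keyFilename is passed explicitly.

// List all buckets in the project with their name and location
gcs.getBuckets(function(err, buckets) {
  if (!err) {
    buckets.forEach(function(value) {
      console.log("name: " + value.metadata.name);
      console.log("location: " + value.metadata.location);
    });
  } else {
    console.log(err);
  }
});
```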
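The same listing can also be written with promises. I am assuming that your version of `@google-cloud/storage` returns a promise when the callback is omitted, resolving to an array whose first element is the list of buckets (check the docs for your version). The sketch takes the client as a parameter (`listBuckets` is a hypothetical helper), which also makes it easy to try out with a stand-in object instead of a real connection.

```javascript
// Hypothetical helper: list bucket names and locations using the promise
// form of getBuckets(). `storage` is any client whose getBuckets() resolves
// to [buckets], e.g. the `gcs` object created above.
async function listBuckets(storage) {
  const [buckets] = await storage.getBuckets();
  return buckets.map((bucket) => ({
    name: bucket.metadata.name,
    location: bucket.metadata.location
  }));
}

// Usage with the real client (needs credentials and network):
// listBuckets(gcs)
//   .then((rows) => rows.forEach((r) => console.log(r.name, r.location)))
//   .catch(console.error);
```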

Task 2: Look Inside Buckets

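The service account needs Storage Object Viewer for read-only access. For writing, Storage Object Creator lets it create objects (but not read, overwrite, or delete them), while Storage Object Admin grants full read/write.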

```javascript
// List the contents of bucket 'some-test-1'
var wt1 = gcs.bucket('some-test-1');

wt1.getFiles(function(err, files) {
  if (!err) {
    // files is an array of File objects
    files.forEach(x => { console.log("Name: " + x.name); });
  } else {
    console.log(err);
  }
});
```
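Cloud Storage has a flat namespace: a "folder" such as `f1/` is just a common prefix of object names like `f1/a1.sh`. `getFiles()` accepts a query object with a `prefix` option (and a `delimiter`, which stops the listing at the next `/`), so you can list one folder without fetching the whole bucket. The sketch below assumes the library returns a promise resolving to `[files]` when no callback is given; `listFolder` is a hypothetical helper.

```javascript
// Hypothetical helper: list the object names directly under a "folder"
// prefix such as 'f1/'. `bucket` is any object whose getFiles(query)
// resolves to [files], e.g. gcs.bucket('some-test-1').
async function listFolder(bucket, folder) {
  const prefix = folder.endsWith('/') ? folder : folder + '/';
  // delimiter '/' keeps the listing to this level instead of recursing
  const [files] = await bucket.getFiles({ prefix: prefix, delimiter: '/' });
  return files.map((file) => file.name);
}

// Usage with the real client:
// listFolder(wt1, 'f1').then((names) => console.log(names)).catch(console.error);
```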

Task 3: Access Files in a Bucket

For downloading, as here, Storage Object Viewer is enough. Writing needs Storage Object Creator (create only) or Storage Object Admin (full read/write).

```javascript
// Download a file (i.e. create a read stream and pipe it to a local file)
var fs = require('fs');

var remoteFile = wt1.file('f1/a1.sh');
var localFilename = 'test-a1.sh';

remoteFile.createReadStream()
  .on('error', function(err) {
    console.log(err);
  })
  .on('response', function(response) {
    // Server connected and responded with the specified status and headers.
  })
  .on('end', function() {
    // The remote file has been fully read. To know when the local file is
    // completely written, listen for 'finish' on the write stream instead.
  })
  .pipe(fs.createWriteStream(localFilename));
```
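If you want to `await` the download and continue only once the local file is completely written, you can wrap the streams in a promise. This sketch uses Node's `stream.pipeline` (Node 10+), which forwards errors from either stream and calls back after the write stream has finished. `downloadTo` is a hypothetical helper that works with any object offering `createReadStream()`, such as `remoteFile` above. The library's own `file.download({destination: ...})` is an alternative worth checking in its docs.

```javascript
const fs = require('fs');
const { pipeline } = require('stream');

// Hypothetical helper: copy a remote file to a local path and resolve only
// after the local file has been fully written (or reject on any error).
function downloadTo(remoteFile, localFilename) {
  return new Promise((resolve, reject) => {
    pipeline(
      remoteFile.createReadStream(),
      fs.createWriteStream(localFilename),
      (err) => (err ? reject(err) : resolve(localFilename))
    );
  });
}

// Usage with the objects from above:
// downloadTo(wt1.file('f1/a1.sh'), 'test-a1.sh')
//   .then((p) => console.log('saved to ' + p))
//   .catch(console.error);
```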