From CA to TK

Moving a Docker container is supposed to be easy, but when you are moving anyway, why not clean up, modernize and improve? That, of course, makes the move as difficult as any non-Docker move.

I moved several containers/services by literally copying the directory with the docker-compose.yml file in it. That same directory holds all the mount points for the containers, so moving is as simple as running this

On the new VM:

    ssh OLD_HOST 'tar cf - DIR_NAME' | tar xfv -

which works like a charm if you have the permissions. If you don't have permission to tar up the old directory (e.g. it contains root-owned files that only root can read, such as private keys), execute both the tar and the un-tar as root.
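
To try the tar pipe before pointing it at a real host, here is a minimal local sketch. `copy_tree` is a hypothetical helper, not part of the original move; it adds `-p` so permission bits survive extraction. The commented cross-host variant assumes passwordless sudo on both machines.

```shell
# copy_tree SRC_PARENT DIR_NAME DEST_PARENT
# Local stand-in for the ssh pipe: archive DIR_NAME and unpack it elsewhere.
copy_tree() {
    # -C switches into the given directory; -p keeps permission bits on extraction.
    tar -cf - -C "$1" "$2" | tar -xpf - -C "$3"
}

# Hypothetical cross-host variant, run on the new VM
# (assumes passwordless sudo on both ends):
#   ssh OLD_HOST 'sudo tar cf - DIR_NAME' | sudo tar xpf -
```

Usage would look like `copy_tree /srv mymailserver /tmp/restore-test`, with both paths being placeholders.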

Then run

    docker-compose up -d

and everything is running again. It will also come back up after a reboot, provided the compose file sets a restart policy such as restart: always.

Mail

For mail I wanted to move away from the home-made postfix-dovecot container I created a long time ago: with the constant threat of security issues, maintenance and updates are becoming mandatory. It also had no spam filter, which back then was less of a problem than it is now. So I was looking for a mail solution that is simpler to maintain. I would not have minded paying for a commercial one. Most commercial email hosting offerings are oversized for my needs, though, and at the same time I have to host 2 or 3 DNS domains, which is often not part of the smallest plan.

My requirements were modest:

- 2 or 3 DNS domains to host, with proper MX records
- IMAP4 and SMTP
- Webmail frontend for those times I cannot use my phone
- TLS everywhere for SMTP, IMAP4 and webmail, with no certificate warnings (so no self-signed certificates)
- 2 users minimum, unlikely ever more than 5
- Aliases for the usual suspects (info, postmaster)
- Some anti-spam solution

In the end I decided to do self-hosting again, if only to not forget how this all works. Here is the docker-compose.yml file:

    version: '3'
    services:
      mailserver:
        image: analogic/poste.io
        volumes:
          - /home/USER_NAME/mymailserver/data:/data
          - /etc/localtime:/etc/localtime:ro
        ports:
          - "25:25"
          - "8080:80"
          - "110:110"
          - "143:143"
          - "8443:443"
          - "465:465"
          - "587:587"
          - "993:993"
          - "995:995"
        restart: always
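
In each port mapping the left side is the host port and the right side is the container port. The mail ports therefore pass straight through, while the web interface ends up on 8080 and 8443 on the host. A small sketch to list the host side, for example when deciding which ports to open in a firewall (`host_ports` is a hypothetical helper):

```shell
# host_ports: print the host side of each "HOST:CONTAINER" port mapping.
host_ports() {
    for mapping in "$@"; do
        # Strip everything from the first colon onwards.
        printf '%s\n' "${mapping%%:*}"
    done
}

host_ports 25:25 8080:80 110:110 143:143 8443:443 465:465 587:587 993:993 995:995
```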

You will have to configure the users and domains once, including uploading the certificate (one certificate with two subject alternative names for the 2 DNS domains). You also need DKIM records (handled by poste.io), SPF records (created manually) and updated MX records. It worked flawlessly!
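
As an illustration of the manual DNS part, the sketch below prints zone-file style MX and SPF lines for each domain. The names example.com, example.org and mail.example.com, the MX priority 10 and the `mx -all` policy are all assumptions for illustration, not the records actually used here.

```shell
# mail_dns_records DOMAIN MAIL_HOST
# Print zone-file style MX and SPF lines for one domain (illustrative values).
mail_dns_records() {
    domain=$1
    mail_host=$2
    # Priority 10 is an arbitrary choice for a single mail server.
    printf '%s. IN MX 10 %s.\n' "$domain" "$mail_host"
    # "mx -all": only the domain's MX hosts may send; everything else fails.
    printf '%s. IN TXT "v=spf1 mx -all"\n' "$domain"
}

mail_dns_records example.com mail.example.com
mail_dns_records example.org mail.example.com

# Checking the published records afterwards (needs network access):
#   dig +short MX example.com
#   dig +short TXT example.com
```

A stricter or looser SPF policy may suit other setups; `-all` rejects everything not sent by the listed hosts.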

Updating the Let's Encrypt certificate is not difficult: since all files are in the /data directory, they can be updated from outside the container. The container does need a restart afterwards, though.
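
A rough sketch of such an update follows. The source file names (cert.pem, chain.pem, privkey.pem) are the ones Let's Encrypt clients such as certbot write. The target directory and the target names server.crt, ca.crt and server.key are assumptions; check what the container's data directory actually contains before copying anything. `install_cert` is a hypothetical helper.

```shell
# install_cert LE_DIR SSL_DIR
# Copy a renewed certificate into the mail server's data directory.
install_cert() {
    le_dir=$1    # e.g. /etc/letsencrypt/live/DOMAIN
    ssl_dir=$2   # assumed: a directory below .../mymailserver/data
    # Target file names are assumptions; verify them against the existing files.
    cp "$le_dir/cert.pem"    "$ssl_dir/server.crt" &&
    cp "$le_dir/chain.pem"   "$ssl_dir/ca.crt" &&
    cp "$le_dir/privkey.pem" "$ssl_dir/server.key" &&
    chmod 600 "$ssl_dir/server.key"
}

# Afterwards, from the directory holding docker-compose.yml:
#   docker-compose restart mailserver
```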

One issue though:

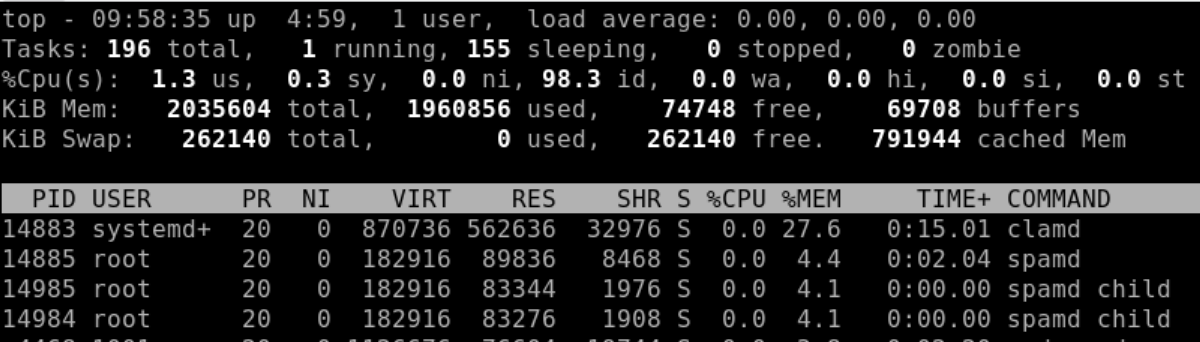

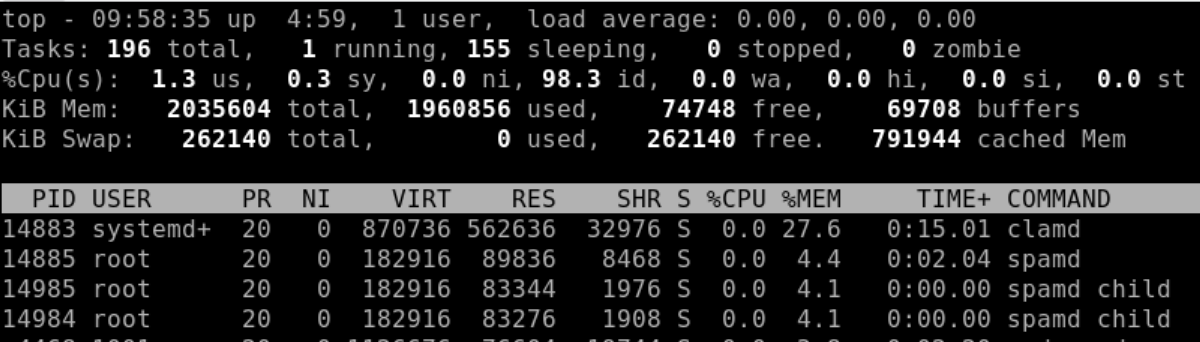

As you can see, quite a lot of memory is used: 27.6% of a 2 GB RAM VM. The small VM I started with had only 1 GB RAM, and while everything was running, it was very low on free memory and had to use swap. That's the only drawback of this Docker image: you cannot turn off ClamAV. Maybe that's OK, though, since viruses and malware are a real problem and this helps to contain them.
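
To put the percentage into absolute numbers, here is a quick back-of-the-envelope calculation. It assumes 2 GB means 2048 MiB and 1 GB means 1024 MiB; `mem_mib` is a hypothetical helper.

```shell
# mem_mib TOTAL_MIB PERCENT: MiB represented by PERCENT of TOTAL_MIB.
mem_mib() {
    awk -v total="$1" -v pct="$2" 'BEGIN { printf "%.0f\n", total * pct / 100 }'
}

used=$(mem_mib 2048 27.6)   # share reported on the 2 GB VM
echo "approx. ${used} MiB in use"

# The same amount expressed as a share of a 1 GB VM:
awk -v used="$used" 'BEGIN { printf "%.0f%% of 1024 MiB\n", used * 100 / 1024 }'

# A live per-container figure is available with:
#   docker stats --no-stream
```

That works out to roughly 565 MiB, or about 55% of a 1 GB VM, which fits with the smaller machine running into swap.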